RANSAC optimization

This page is available for practice as an interactive jupyter notebook. In this task, we will optimise the implementation of RANSAC from the previous assignment. The runtime of the RANSAC algorithm depends on the required number of draws, and the time, the algorithm needs to process them. In this task, you shall improve the RANSAC algorithm of the last exercise under the aspect of complexity. Instead of drawing random points draw points from the neighborhood:

- Choose one point $p_1$ randomly

- Draw point $p_2$ and $p_3$ in the neighborhood of $p_1$

- Consider threshold!

- Min radius to avoid effects by noise

- Max radius in order not to draw $p_2$ and $p_3$ from another plane.

- Use Kd-tree for range search

# imports

from plyfile import PlyData

import numpy as np

from imageio import imread

import math

# YOUR CODE HERE

raise NotImplementedError()

# implement improved RANSAC

# implement RANSAC

def ransac_opt(points, z, w, max_dif, min_raius, max_radius):

'''Finds the normal vectors of the planes in a scene

:params:

z: likelihood, that plane can be found

w: outliers in percent

t: treshold for accepted observations

min_radius: min distance of p_2 and p_3 from p_1

max_radius: max distance of p_2 and p_3 from p_1

:return:

n: - normalized normal vector

d: - distance from origin

new_points: - list - Consensual points

outliers: - list - Outlier points

'''

# YOUR CODE HERE

raise NotImplementedError()

return n_max, d_max, max_points, outliers

# load data

_data = PlyData.read('schneiderberg15cm_1.ply').elements[0].data

schneider1 = np.stack((_data['x'], _data['y'], _data['z']), axis=1)

_data = PlyData.read('schneiderberg15cm_2.ply').elements[0].data

schneider2 = np.stack((_data['x'], _data['y'], _data['z']), axis=1)

Compare

Use suitable values for the minimum and maximum point distance. Detect in both scenes the same planes as in the last exercise and compare the results. How does the runtime improve? Which parameters did you use and how many iterations were required?

# YOUR CODE HERE

raise NotImplementedError()

Generation of a point cloud from a distance image

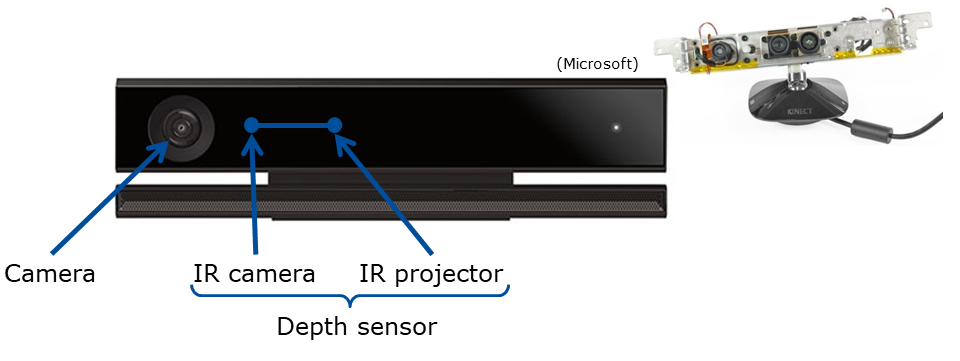

In the following task we will generate 3D point cloud from a distance image. Given is the distance image in file ‘depthImage.PNG’. It has been generated with a Microsoft Kinect v2, which originally has been developed for Microsoft Xbox. Kinect combines depth sensor, camera and a 3D microphone. The infrared projector beams a (known) structured pattern which is measured by the infrared camera.

Microsoft Kinect v2

Our Kinect has following parameters:

- Resolution of the depth camera: 512 x 424 px

- Measurement distance: 0.5-4.5 m

- Opening angle depth camera

- 70° horizontal

- 60° vertical

- Focal distance f: 365.5953 px

- Center point

- cx: 260.1922

- cy: 209.5835

Calculation

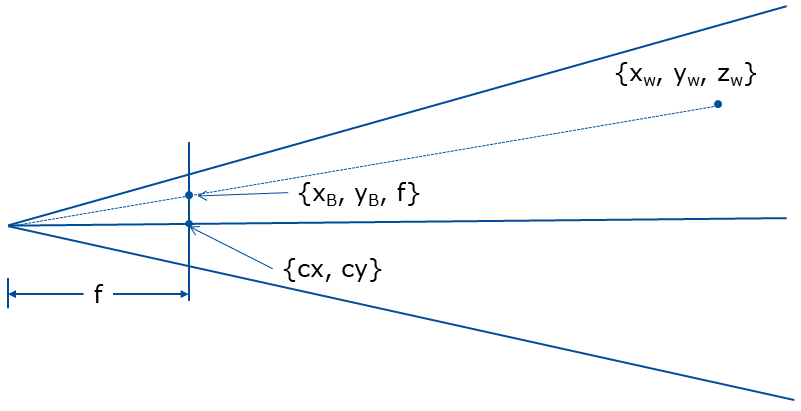

We are given $cx$, $cy$ the coordinates of the image center and $f$ the focal length. Based on geometry of the sensor sketched below we can compute the $x_w,y_w,z_w \in \mathbb{R}$ real world coordinates from $x_b,y_b \in \mathbb{N}$ from the discrete depth image coordinates and $d \in \mathbb{R}$ distance measured for a certain pixel $x_b,y_b$.

$\LARGE y_w=d$, $\LARGE x_w=\frac{(x_b-cx)y_w}{f}$ and $\LARGE z_w=\frac{(x_b-cy)y_w}{f}$

def depth_to_world(f, cx, cy, image):

""" Converts depth raster to 3D coordinates

f: Focal distance

(cx, cy): center point

image: 2D Raster with depth values stored at each cell

return: numpy array of 3d points

"""

# YOUR CODE HERE

raise NotImplementedError()

return points

# load image

img = imread('depthImage.PNG')

Expected outcome of the conversion looks like this:

| Author: | Artem Leichter |

| Last modified: | 2019-04-30 |