Hardware for ML

In computer science, a large number of problems can be reduced to inferring new information from given input. Examples include identifying a car in an image or classifying an email as spam. To solve these problems, we need a function that takes the input and maps it to the desired output. The classical approach is to implement this function by hand and define rules such as “If the image contains black circles/ellipses (tires), then there is a car in it” or “If an email contains more than 20 spelling mistakes, then it is spam”. Honestly, the last rule would make it almost impossible for me to use email, and the first one isn’t perfect either.

It is easy to implement a solution if there is a well-defined model of the problem. A simple example: if we are given a 2D circle defined by its radius and center, it is easy to determine whether a new point lies within the circle. We just calculate the Euclidean distance from the point to the center and compare it with the radius. But what if there is no suitable model for our problem? We could obviously try to develop one (if that is even possible). That would cost development time, which is expensive, and we are short of developers anyway. Another option is to rely on data-driven development.
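Both kinds of hand-written solutions described above fit in a few lines of Python. This is only an illustrative sketch: the function names are hypothetical, points are assumed to be (x, y) tuples, and `math.dist` requires Python 3.8 or newer.

```python
import math


def inside_circle(point, center, radius):
    """Hand-written model: a point lies inside (or on) the circle if its
    Euclidean distance to the center is at most the radius."""
    return math.dist(point, center) <= radius


def is_spam_by_rule(spelling_mistakes):
    """Hand-written rule from the text: more than 20 mistakes means spam."""
    return spelling_mistakes > 20


print(inside_circle((1, 1), (0, 0), 2))  # True  (distance is about 1.41)
print(inside_circle((3, 0), (0, 0), 2))  # False (distance is 3)
print(is_spam_by_rule(21))               # True
```

The circle check is reliable because the underlying model is exact. The spam rule is just as easy to write, but nothing guarantees that the threshold of 20 reflects reality.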

The data-driven approach focuses on approximating a suitable model from examples, also called instances, which are pairs of input and expected output. (This is a simplification; there are other strategies as well, such as unsupervised learning.) In an iterative process called machine learning (ML), we use a lot of computing capacity and large amounts of data to determine an approximate model for the given problem. The resulting model is not perfect; it just works in most cases. Most of you have found an email in your inbox congratulating you on winning a lottery and thought: “That is so obviously spam.”
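To make the idea concrete, here is a minimal sketch of learning a rule from examples instead of fixing the threshold of 20 by hand. It assumes a single hypothetical feature (the number of spelling mistakes in an email) and made-up labeled pairs, and it uses the classic perceptron update, one of the simplest iterative learning algorithms. Real spam filters use many more features and different algorithms.

```python
def train_perceptron(examples, max_epochs=10_000):
    """Learn weight w and bias b from (feature, label) pairs, label +1 or -1.

    Perceptron rule: whenever an example is misclassified, move the
    decision boundary towards it. On linearly separable data this is
    guaranteed to stop after finitely many updates.
    """
    w, b = 0.0, 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, y in examples:
            if y * (w * x + b) <= 0:  # wrong side of (or on) the boundary
                w += y * x
                b += y
                mistakes += 1
        if mistakes == 0:  # every training example is classified correctly
            break
    return w, b


def predict(w, b, x):
    """Return +1 for spam, -1 for not spam."""
    return 1 if w * x + b > 0 else -1


# Hypothetical training data: (spelling mistakes, +1 spam / -1 not spam)
examples = [(0, -1), (1, -1), (2, -1), (3, -1), (5, -1),
            (15, 1), (18, 1), (22, 1), (30, 1)]
w, b = train_perceptron(examples)
print(predict(w, b, 25), predict(w, b, 2))  # 1 -1
```

The learned threshold (where w * x + b changes sign) ends up somewhere between 5 and 15, determined by the data rather than chosen by a developer.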

A typical ML pipeline consists of four parts. Preprocessing is almost always the first step, in which the data is prepared for a particular ML algorithm. This could mean resizing images to a fixed size or removing stop words from natural language data such as email messages. Training refers to the algorithm that iteratively approximates the model; it is the main and most computationally intensive part. The trained model is then validated on data that was not part of the training process and for which we know the expected outcome. If the model performs well (low error) on this unseen data, we can assume that it can be applied to fresh data for which we want to estimate particular information, which is the fourth part: application.
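The four parts can be sketched end to end on a toy spam example. Everything here is an assumption for illustration: the tiny stop-word list, the made-up messages and, above all, the “training” step, which simply collects words that occur only in spam messages instead of running a real iterative learning algorithm.

```python
STOP_WORDS = {"a", "an", "the", "to", "and", "of", "you"}  # hypothetical, tiny


def preprocess(message):
    """Preprocessing: lowercase, split on whitespace, drop stop words."""
    return [w for w in message.lower().split() if w not in STOP_WORDS]


def train(examples):
    """Toy training step: remember words seen in spam but never in non-spam."""
    spam_words, ham_words = set(), set()
    for tokens, is_spam in examples:
        (spam_words if is_spam else ham_words).update(tokens)
    return spam_words - ham_words


def predict(model, tokens):
    """Flag a message as spam if it contains any 'spam-only' word."""
    return any(t in model for t in tokens)


def error_rate(model, examples):
    """Validation: fraction of held-out examples that are misclassified."""
    wrong = sum(predict(model, tokens) != label for tokens, label in examples)
    return wrong / len(examples)


# Made-up data: (message, is_spam)
train_raw = [("Congratulations you won the lottery", True),
             ("Claim your free prize now", True),
             ("Meeting moved to Monday", False),
             ("Please review the attached report", False)]
test_raw = [("You won a free prize", True),
            ("Report for Monday meeting", False),
            ("Lottery results are out", True),
            ("Can you call me now", False)]

train_set = [(preprocess(m), y) for m, y in train_raw]     # 1. preprocessing
test_set = [(preprocess(m), y) for m, y in test_raw]
model = train(train_set)                                   # 2. training
print(error_rate(model, test_set))                         # 3. validation: 0.25
print(predict(model, preprocess("Free lottery tickets")))  # 4. application: True
```

The one wrong prediction on the held-out set (“now” was only ever seen in spam during training) is exactly the kind of error that validation is meant to reveal before the model is applied to fresh data.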

The central processing unit (CPU) is a general-purpose processor that is good at executing a wide variety of instructions. A CPU can process the whole ML pipeline. Most algorithms, such as support vector machines (SVMs) or decision trees, can be run on a CPU without any further problems. (That said, there are GPU-accelerated implementations of boosted trees as well.) This situation changes when we talk about deep learning (DL), the class of algorithms based on deep neural networks. In recent years, DL has had many successes, and it is therefore very popular among machine learning practitioners. DL changes the scale of the computational task.

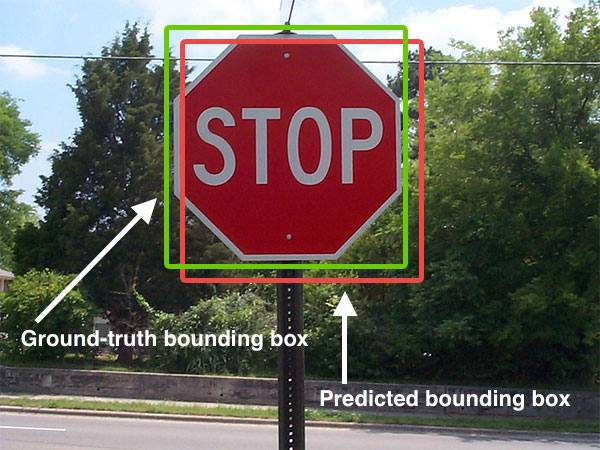

By Adrian Rosebrock - http://www.pyimagesearch.com/2016/11/07/intersection-over-union-iou-for-object-detection/, CC BY-SA 4.0

Let us take a look at a typical DL application. The ImageNet Large Scale Visual Recognition Challenge (ILSVRC) is a contest in which participants solve tasks such as classification, localization and detection of objects in images. The data set consists of more than 1 000 000 images in 1 000 categories. The most successful models on this benchmark are deep neural networks. One of them is ResNet-50, which contains about 25 000 000 parameters that have to be trained. Training and applying deep neural networks boils down to matrix multiplications and convolutions over all of these 25 million parameters. Such calculations are only feasible in reasonable time on a system that can execute many fast, simple instructions in parallel.
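To get a feeling for this scale, the parameter and operation counts of a single convolutional layer can be computed directly. The sketch below assumes a standard 2D convolution with square k x k kernels and an optional bias; the layer sizes in the example (3 x 3 kernels, 64 input and 64 output channels, a 56 x 56 output feature map) are chosen for illustration and are not taken from this article.

```python
def conv2d_params(k, c_in, c_out, bias=True):
    """Trainable parameters of a k x k convolution: one k*k*c_in kernel
    per output channel, plus one bias per output channel if enabled."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def conv2d_macs(k, c_in, c_out, h_out, w_out):
    """Multiply-accumulate operations (MACs) for one image: every output
    value needs k*k*c_in multiplications, each followed by an addition."""
    return k * k * c_in * c_out * h_out * w_out


print(conv2d_params(3, 64, 64))        # 36928
print(conv2d_macs(3, 64, 64, 56, 56))  # 115605504
```

A single such layer already needs more than 100 million multiply-accumulate operations per image, and a deep network stacks dozens of layers and processes millions of training images, many times over.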

Progress in machine classification of images: the error rate (%) of the ImageNet competition winner by year.

Red line - the error rate of a trained human annotator (5.1%).

By Sandegud - Own work, CC0

This is where Graphics Processing Units (GPUs) come to the rescue. This popular commodity hardware, used mostly for rendering graphics, is specialized in fast, parallel execution of matrix operations. GPU-accelerated neural network implementations are one of the crucial reasons why deep neural networks are feasible. GPUs achieve a much higher number of floating point operations per second (FLOPS) than CPUs. Count every multiplication or addition of two floating point numbers as one floating point operation (FLOP). Multiplying two 2 x 2 matrices with the standard algorithm then takes 8 multiplications and 4 additions, i.e. 12 FLOPs. GPU implementations of deep learning models accelerate not only the training step of the ML pipeline but also the validation and application steps. This lets us use large validation sets: even if only 10 % of a data set of one million images is held out for validation, that is still 100 000 images. When applying trained models, we may need real-time performance or want to apply the model to a large data set.
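The FLOP count for the 2 x 2 case can be checked with a naive matrix multiplication that counts its own operations. This is a plain Python sketch for counting only, not how GPU libraries actually multiply matrices; the function name is hypothetical. For an n x m matrix times an m x p matrix, each of the n*p output entries needs m multiplications and m - 1 additions, so the total is n*p*(2m - 1) FLOPs, which gives 12 for two 2 x 2 matrices.

```python
def matmul_count_flops(a, b):
    """Naive product of matrices a (n x m) and b (m x p), given as lists
    of rows; returns the product and the number of FLOPs performed."""
    n, m, p = len(a), len(b), len(b[0])
    if any(len(row) != m for row in a):
        raise ValueError("inner dimensions do not match")
    c = [[0.0] * p for _ in range(n)]
    flops = 0
    for i in range(n):
        for j in range(p):  # each c[i][j] is independent of the others
            acc = a[i][0] * b[0][j]  # first product: 1 multiplication
            flops += 1
            for k in range(1, m):
                acc += a[i][k] * b[k][j]  # 1 multiplication + 1 addition
                flops += 2
            c[i][j] = acc
    return c, flops


c, flops = matmul_count_flops([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(c)      # [[19, 22], [43, 50]]
print(flops)  # 12
```

Because no output entry depends on any other, all of them can be computed at the same time. This is exactly the kind of independent, simple work a GPU spreads across its thousands of cores.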

Performance overview of Nvidia GPUs.

By User:Elizarars - Own work, CC BY-SA 3.0

There are alternatives to GPUs, such as specialized ML hardware like the Tensor Processing Unit (TPU). Nevertheless, GPUs are widely available commodity hardware. Especially for Nvidia graphics cards, there is a large number of machine learning libraries, which makes them the de facto standard for ML.